When setting up a modern data stack, data warehouse modeling is often the very first step. It is important to create an architecture that supports the data models that you wish to build. I often see people going straight to writing complex transformations before thinking about how they want to organize the databases, schemas, and tables within their warehouse. To succeed, it is key to design your data warehouse with your models in mind before starting the modeling process.

What is data warehouse modeling?

Data warehouse modeling is the process of designing and organizing your data models within your data warehouse platform. The design and organization process consists of setting up the appropriate databases and schemas so that the data can be transformed and then stored in a way that makes sense to the end user.

When data warehouse modeling, it is important to keep in mind the specific access controls and separation of environments. You need to consider which users have access to certain databases, schemas, tables, and views. You also need to remember to keep your development and production environments separate but similar in how they function.

Who is involved in data warehouse modeling?

While responsibilities vary for data engineers, analytics engineers, and data analysts depending on your team, here is what I’ve experienced in my current and previous roles.

First, data engineers will ensure data is being properly recorded by front and backend processes on the website and with different systems used. Ensuring this raw data is being captured is necessary to collect raw data to ingest into your warehouse. Rather than directly creating a BI architecture, which is more of an analytics-focused role, data engineers ensure that the right processes are in place so that analytics teams have the data they need.

Analytics engineers, like me, focus on writing dbt data models that help to transform the raw data. However, they can also be in charge of setting up the data warehouse depending on the organization and the size of the data team. Because the analytics engineer is working so closely with the transformed data, it often makes sense for them to decide on a structure for the models they are building. Because dbt itself is so intertwined with data warehouse modeling, it can be easiest to have the same person work on these two things.

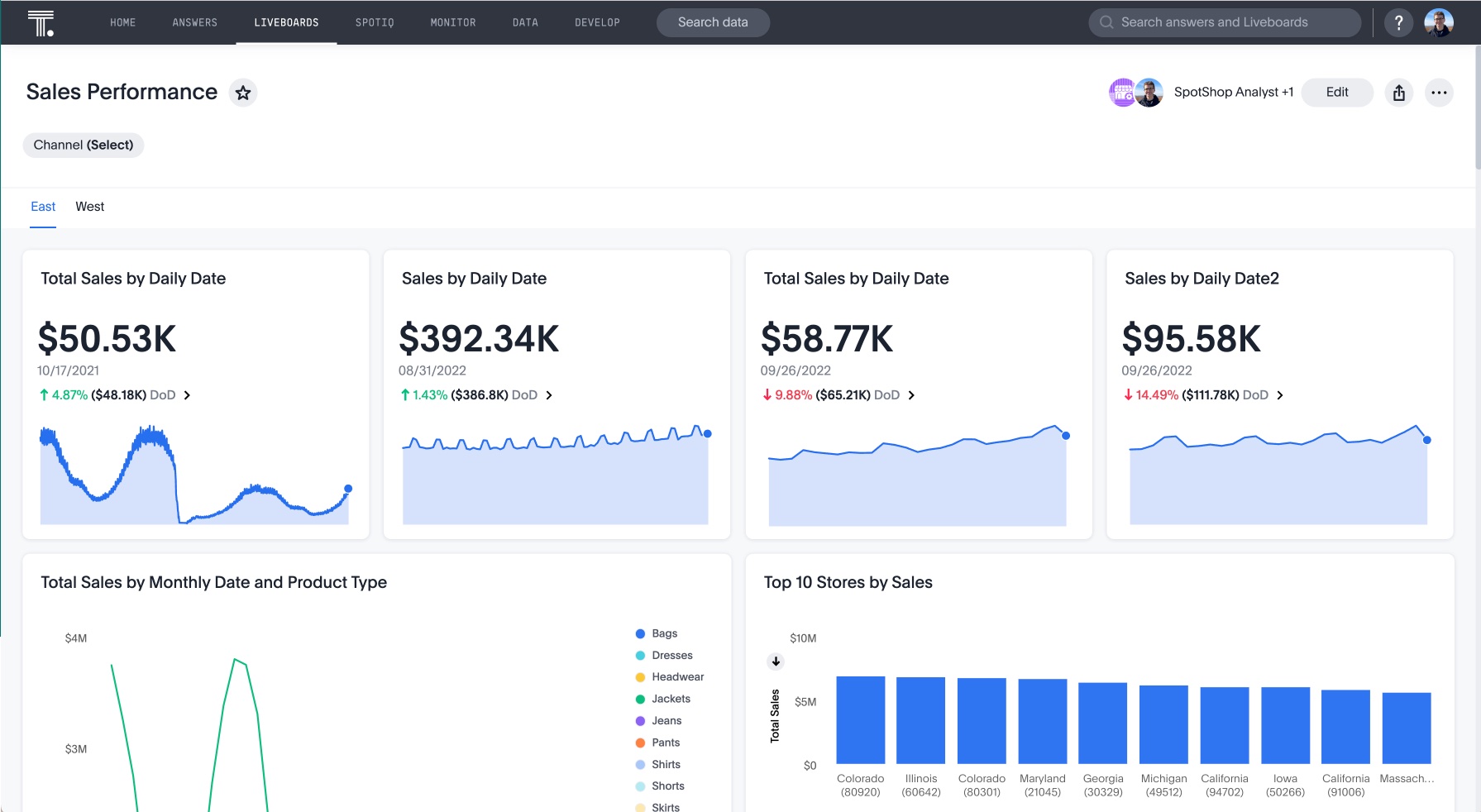

The data analyst then utilizes the models built by the analytics engineer. They use these models to power dashboards and reports that are then used by business stakeholders. Self-service analytics tools like ThoughtSpot empowers front-line decision makers by providing interactive data analytics. These stakeholders typically represent different domains of the business such as sales, marketing, growth, and product. Depending on the domain, they’ll use these visualizations to make decisions on a campaign to create, a strategy for retaining customers, or what product to restock next.

For example, check how ThoughtSpot Liveboards help sales leaders evaluate team performance.

Start your 14-day free trial to see how ThoughtSpot can help you get the most value out of your data stack investment.

3 types of models to include in your data warehouse

Now, what does data warehouse modeling entail? There are three main types of data models, made popular by the use of dbt. Each of these models serves a different purpose and is set up differently within the warehouse. We will discuss what each of these is for and where they should sit in your data warehouse.

Base or staging models

Base models (or staging models as dbt now calls them) are views that sit directly on top of your raw data. They include basic casting and column renaming to help keep your standards consistent across different data sources. It is in these models that you decide the type of timestamps to use, how to name date fields, whether to use camel or snake case for naming your columns and how to define primary keys. Base models are instead referenced rather than the raw data tables by data analysts in their reports and dashboards.

Here’s an example of a base model I would write in dbt for an orders table:

SELECT

Id AS order_id,

OrderDate::timestamp_ntz AS ordered_at,

CAST(orderDate AS date) AS order_date,

CustomerId AS customer_id,

OrderTypeId AS order_type_id

FROM {{ source(‘company_db’, ‘orders’) }}Here I am redefining the names of my columns so that they are in snake case. I am casting the timestamp column to be a specific timestamp type and also changing the naming convention to end in at. As for the date column, that is being properly cast to a date and following the naming convention of ending in date. Now, the reader of the model will be able to better identify which column is a timestamp and which is a date.

Even though I am transforming my data, the data itself stays the same. There are no joins, aggregates, or calculations in base models. The table’s dimensions and number of columns should be identical to that of the raw data table it is referencing.

Intermediate models

Intermediate models are important when using a tool like dbt that helps make your data transformations modular. The purpose of intermediate models is to speed up the time it takes for your data models to run and make it easier for analytics engineers to debug more complex models.

Intermediate models sit between base and core models, acting as a liaison between the two model types. While they aren’t usually accessible to anyone besides the analytics engineer that wrote them, they are key in the transformation process. They make it so the same code does not need to be repeated in multiple core data models, but can instead be run once and referenced downstream.

These models only reference base models rather than raw data or core models. Because they are a means to an end and not a source or core model, analysts don’t have access to use them in their visualizations. Luckily, data warehouses like Snowflake, Google Big Query, and others make it easy to restrict access to certain schemas so that they can’t be used in final analyses or reporting.

An intermediate model may look like this:

SELECT

Anonymous_sessions.session_id,

Anonymous_sessions.visitor_id,

Customer_visits.customer_id

FROM {{ ref(‘anonymous_sessions’) }} anonymous_sessions

LEFT JOIN {{ ref (‘customer_visits’) }} customer_visitsThis data model is mapping anonymous sessions back to a customer_id. This type of mapping is important to understand which web sessions are from which customers, or maybe users that aren’t customers at all. A model like this would most likely be used in multiple core data models, making it one that we would want to exist independently.

Core models

Core models are your data models that produce a fully transformed dataset that can be used by data analysts and business stakeholders. They are the final product in your transformation process! Core models reference base models and intermediate models to produce a final dataset. They can be simple dimension tables that join related base models or complex data models with lots of underground logic.

A core model may be simple like this:

SELECT

Orders.order_id,

Orders.ordered_at,

Order_types.order_type_name,

Products.product_name

FROM {{ ref(‘orders’) }} orders

LEFT JOIN {{ ref(‘order_types’) }} order_types

ON orders.order_type_id = order_types.order_type_id

LEFT JOIN {{ ref(‘products’) }} products

ON orders.product_id = products.product_idHere we are joining base models with their corresponding mapping base models to get more information about the names of certain ids in the data. This can be helpful to do in your data warehouse so tables don’t need to be joined directly within a report or dashboard. This is also something that a data analyst would do a lot to get the information they need, so it makes sense to create it as a core model!

How to organize these data models into different databases

How should you organize your base, intermediate, and core data models within your databases? While this is a matter of personal preference, here’s what I’ve found works for me.

Base database

First, I wanted to store all of my base models in their own database. I created one called “BASE” which I then separated by different schemas, one for each data source. So, for example, there is a schema for Shopify, Facebook, the company’s backend database, SFTP, etc. This mimics the exact structure of my RAW database where I ingest all of my different data sources. Remember, base models mimic your raw data tables, so it makes sense that the organizational structure would also mimic that of the database where the raw data is stored.

Because base models exist as views within your data warehouse, they will be referenced by both development and production models. You don’t need to have the same base tables in both development and production databases. Because they are used by both environments, it’s important to separate them into their own database with schemas for each data source.

Development database

Next, we need a place to store our intermediate and core models that are in the development process. When using a data transformation tool like dbt, it’s imperative to test code and see what it produces when applied to your data sources. Having a development database is key in testing your models and ensuring they perform as expected before moving them to production.

Within your development database, I recommend having two separate schemas — one for intermediate models and another for your core models. It’s important to keep these types of models separate so you know which ones to use in your end analysis. Remember how I mentioned earlier that you will also want to restrict access to the intermediate models? Storing intermediate models in their own schema will make this process a lot smoother. You’ll be able to control access on a schema level rather than configuring it for every model.

Production database

Lastly is your production database. This database is the most important because it is the one that everyone else on your team will be using to access your data models. It is the place they turn to for high-quality, trusted data. Your production database should mimic the same structure as your development database. This means it should have two schemas: one for intermediate models and another for core models. By having the same structure, you can ensure your models will behave as expected after testing in development.

Benefits of data modeling in the cloud

When performing data warehouse modeling in the cloud as compared to on-prem, you have many more features to use to your advantage. With data modeling tools like dbt and cloud data platforms such as AWS Redshift, Databricks, Snowflake, or Google Big Query, you can build your data warehouse with incremental models which allow you to only run your transformation code on new data, saving you on compute costs and run time.

Cloud data warehouses also allow you to take advantage of features like views, rather than needing to create a table that would take up space and be more expensive to maintain. Because views only sit on top of other tables, you only pay for the query you run on them rather than the storage space. This helps to reduce costs and keeps your warehouse data clean through the standards you put in place with base models.

Lastly, cloud data platforms provide elastic compute allowing you to dynamically scale your resources up and down. Snowflake allows you to change your warehouse size depending on how fast you need your data models to run. If you find it too expensive, you can always size them down. This ability adds a lot of flexibility and ensures your infrastructure can grow as the size of the business grows.

The impact of data warehouse modeling

When data warehouse modeling, you need to build your architecture with base, intermediate, and core models in mind. Base models are necessary to protect your raw data and create consistent naming standards across different data sources. Intermediate models act as the middleman between base and core models and allow you to build modular data models. Core models are the final transformation product utilized by the data analyst.

It’s important to plan and create the databases and schemas within your data warehouse before you begin the modeling process. Finding a system that works for you will allow you to build powerful models that are effective in delivering insight to your organization’s business teams. These models have the power to change the data culture within your organization by the standards you put in place in your warehouse.

Madison Schott is an analytics engineer for Winc, a wine subscription company that makes all its own wines, where she rebuilt its entire modern data stack. She blogs about analytics engineering, data modeling, and data best practices on Medium. She also has her own weekly newsletter on Substack.