As I woke up last week to the reality of our $248M Series E funding, I couldn’t help but look back at the amazing adventure that last seven years have been. We brought search to the analytics market for the first time, helped enterprises around the world transform how they use data, and furthered our mission of building a more fact-driven world.

While I was reminiscing, I was wondering what does it all mean? How should we interpret this new reality?

A Platform Approach from the Beginning

Often, when you are trying to build a product that is ahead of its time, the necessary ingredients just don’t exist, and in order to make the dream a reality, you have to invent a new set of components or a platform on which the new product gets built.

Ford wanted to build the most economical cars, and ended up inventing the moving assembly line. When Elon Musk was contemplating building Tesla, his vision was to build the car of the future, but in order to build it, he had to innovate in the battery technology. When Google was trying to organize the world's information, building the world’s largest data centers and the software stack that could scale to hundreds of billions of documents became a necessity – and out came technologies like GFS, MapReduce, Bigtable and software containers that became key ingredients for the future of computing.

When you are building a startup with a grand vision, but also with the realities of limited funding and runway, it’s not an easy decision to build a big platform that is not even the first product that you plan to sell. Often the right decision is to live with a compromise, and build a Minimum Viable Product (MVP) so that you can be quick to market, prove some value.

Choosing the Blue or the Red Pill?

If you are crazy enough to take the red pill instead of blue pill, the chances are that you will fail to launch before money runs out. The valley is full of such stories. But every once in a while you come out the other side swinging. And that’s when you know you have something of enduring value, something that will challenge the status quo of the industry and inspire a whole new generation of products.

Here at ThoughtSpot, we are not at all close to that point where we can declare we’ve accomplished such a feat. In fact, internally, we broadly believe that we are only 2% done. But in my humble opinion, this milestone for ThoughtSpot means that we have a pretty decent shot at choosing the red pill, and making it work. The huge bet we made on building a massively scalable platform, which was required to build the analytics and AI offering of the future, has been de-risked. We have all the fuel we need to go build a company of lasting value on top of this platform.

Where It All Started

If you are not familiar with the ThoughtSpot platform, you may be wondering what the hell I’m talking about, so let’s go back in time. About seven years and 3 months to be precise.

We had just founded ThoughtSpot with the goal of building a product that will allow any business user to derive valuable insights from data at a massive enterprise scale without requiring technical training.

We realized that current products in the industry lacked two important components:

An interface that was usable by any curious business user and did not require sitting through training for 3 days.

A system that could scale to 100s of billions of records (which is no longer uncommon in large enterprises) and provide responses at the speed of thought.

Search Interface

When we began, we knew we needed an interface that would allow any business user to express often complex analytical intent. We iterated over multiple interface choices but ultimately converged on a search-like interface because almost everyone who uses computers is familiar with search as a paradigm for asking questions and getting information. We also found it far easier to express complex analytical intent using search than some point/click/drag interface. For example, imagine trying to express a query in a typical query builder UI for “growth of sales for iPhone vs. iPad”.

The challenge is that this is not a typical search. Unlike Google, we are not literally searching for the words the user typed. Rather, we are searching the billions of tokens in the data to contextualize the user's input and convert it into a well-formed SQL query. Also, while Google can happily provide 10 blue links and let users pick the right answer, a business application like this always needed to give one precise answer that the user could trust. To complicate things further, most enterprises have a language of their own with their specific KPIs, business entities, product names, and other jargon, and we did not want to build a product that would take months of consulting work to make it usable. We thought about leveraging something like Apache Lucene, couple it with some query templates, and perhaps an natural language processing library, but the result would have been too limiting to be of any use to any enterprise.

We came up with the following approach:

A large distributed system that can index every possible entity inside a database, including all the values in every cell, and search through billions of those entities for an exact match, a partial match, phonetic match, or even a partial misspelling match. We designed this system to be able to respond in under 10 millisecond, so that we could search for 10 to 100 different string fragments of a question at the same time and still support and end to end latency of under 200 ms for auto complete suggestions and query compilation.

A dynamic compilation and auto completion engine that can work with an automatically generated DSL with billions of tokens based on the customers database. Also, a Graph engine that understand Schema, Join Graph, nature of relationships between tables and appropriately generates the right join and query structure for fairly complex questions automatically.

A machine learning system that is constantly learning from the choices made by users to give smart personalized results and suggestions.

A High-Performance Compute Engine

Our choice of search as the interface meant that user expectations around latency would also be very similar to what they get from Google or Amazon. We’re talking about seconds, not minutes. We wanted to solve the problem in a way that we could address use cases that scaled up to a hundred billion rows and a hundred terabytes of structured data. We were also certain that we could not rely on pre-aggregations like other solutions had leveraged because that is one of the fundamental reasons why any change in an analytics request leads to multiple weeks of effort.

We had one thing going for us: DRAM prices had fallen dramatically in previous years. We could contemplate a solution where we kept all the data in memory being economically viable.

With this assumption, we went ahead and benchmarked pretty much everything I could lay my hands on, including all the open sourced big data technology as well as some of the commercial solutions. I wanted a system where I could throw a few billion rows and ask a simple aggregation question like “total revenue for each state” and get back an answer in less than a second.

Nothing that existed came close to the performance I was looking for, even by an order of magnitude. The only thing, and I mean the only thing, that gave me that kind of performance, was if the data was already loaded in columnar format in memory and I wrote a hand optimized c++ program to compute the result, compiled it in optimized mode, and then ran the program. Even moving from c++ to Java dropped performance by 4x. Using any general purpose database or compute engine gave me ten to thousand times worse performance.

That is when we made a choice to build our own distributed in memory compute engine where data-structures will be prebuilt, and for executing a query, in real time, we will generate optimized c++ code, compile it and then run that code. A number of other JIT compilation engines have existed or since been built, but one key differentiator for us is that most database designers are worried about having a disk seek that will cost around 10-50 millisecond, while we are maniacally focused on things like branch mis-prediction that adds up 10 nanoseconds, L3 cache miss, which can cost 50 nanoseconds, and not inlined function calls that again cost a few nanoseconds. We had already assumed that we will never touch a disk or SSD while processing a query. This has resulted in dramatically superior results where we see more than an order or two of magnitude difference in query time for large datasets.

An Incredible Bet

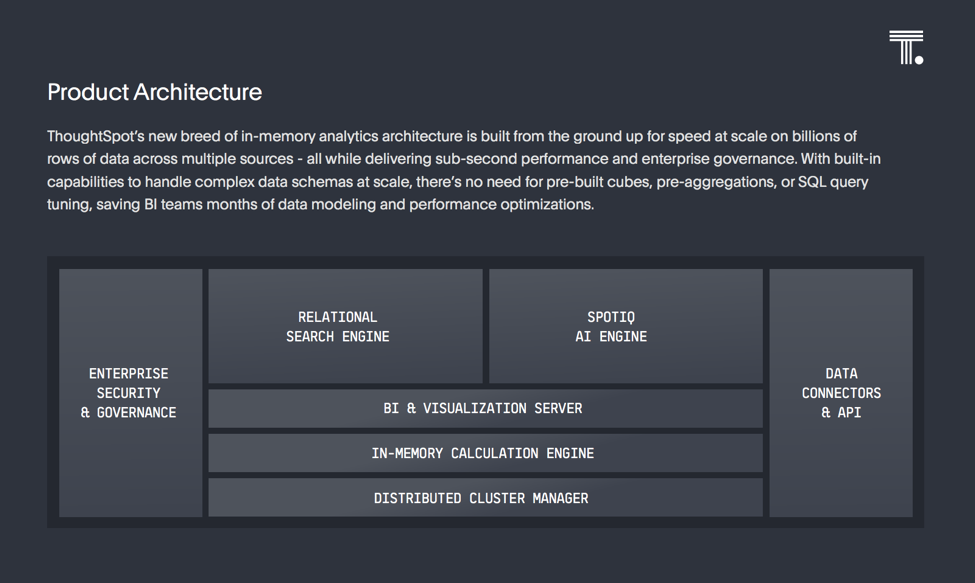

So, in the winter of 2012, with barely a 10 person engineering team and small amount of capital, we decided to take the red pill and build a platform that consisted of:

Search and Language Understanding Platform

An MPP in-memory compute engine that was going to be an order of magnitude faster than anything else that existed

A Distributed cluster management and containerization infrastructure, because Kubernetes was not invented yet, and other solutions did not meet our needs, and

Everything else that a modern analytics product is expected to do.

We had no idea how this was going to play out, but the results speak for themselves.

In every major industry if you look at the top 3 leaders at least one of them would be using ThoughtSpot in a mission critical way with seven figure investment.

One of our customers that is a household name in technology world had invested millions of dollars in a different in-memory database and was running usual dashboards on top of it and had dashboards that would take upwards of five minutes to load because of the volume of data. Since having deployed ThoughtSpot they report a 30x performance improvement.

Another customer, who simply could not analyze all their transactions at granular level was leaving hundreds of millions of dollars in price optimization before they could deploy ThoughtSpot.

So, what does this milestone means to me? It means incredible gratitude to the amazing builders who took the red pill with us and got us this far. It also means an incredible responsibility because we have an amazing BI platform and amazing start, but we are only 2% done.