With the recent announcement of ThoughtSpot Sage, we launched a number of enhancements to our search capabilities, including AI-generated answers, AI-powered search suggestions, and AI-assisted data modeling.

In this article, we walk you through the steps we take to secure your data during the LLM interaction. Looking more broadly, we’ll also describe the security process we follow during any application iteration or enhancement, so you can see the lengths we go to in order to keep your data secure.

TLDR

We use OpenAI hosted on Azure as a service. Azure OpenAI service is SOC 2 compliant.

We have a modified abuse monitoring contract with Azure which guarantees they will not store our prompts or completions.

In the prompt, for every query, we pass the following data:

Column names and types

A maximum of three sample values for each text attribute column (controls are available to disable this)

The natural language query entered by the user

ThoughtSpot provides controls at multiple levels to govern use of the LLM features: per-tenant compute instance, organization within a tenant, user group, user, table, and column.

The architecture is designed to be resilient to attacks that target LLMs, such as prompt injection and prompt leaks.

Architecture

Let's start with the big picture and look at how we extended our cloud architecture with additional internal and external interfaces to integrate the LLM.

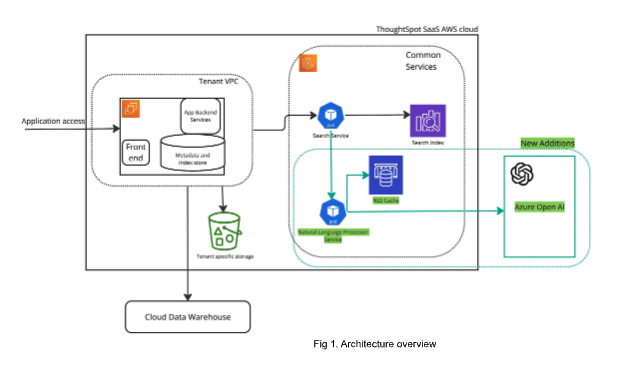

A bit of background on our cloud architecture:

ThoughtSpot is hosted as a set of dedicated services and resources created for specific tenants and a group of multi-tenant common services. Every tenant’s ThoughtSpot application has a set of services dedicated to the tenant, including:

Encrypted storage bucket for metadata and operational state maintenance

Connection to tenant’s data warehouse

Per-tenant keys for encryption of data at rest and in motion

IAM, network, and security policies with per-tenant controls

The common multi-tenant services are hosted in an AWS-managed Kubernetes instance. This includes services that:

Manage and monitor the tenant-specific resources (this does not include access to tenant data)

Maintain indexed data to serve your application home page

The search service described in the last point predates the GPT integration. This multi-tenant service isolates each tenant’s metadata index, and it authorizes and filters the search requests from every tenant. This design ensures that every tenant sees search results only from its own index.

All communication across tenant-specific compute instances, the common services, and external interaction with your cloud data warehouse is secured with Transport Layer Security (TLS). No information is shared until the request is authorized, which involves verifying the identity of the client service that initiated the request and checking that the requested information is permitted in the request context.
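
The isolation described above can be illustrated with a minimal sketch. It is not ThoughtSpot's implementation: the class name, the in-memory dictionary, and the idea that the tenant ID arrives from an already authenticated caller identity are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TenantScopedSearch:
    """Hypothetical stand-in for a multi-tenant search service."""

    # tenant_id -> names of indexed objects (illustrative in-memory index)
    indexes: dict[str, list[str]] = field(default_factory=dict)

    def add(self, tenant_id: str, name: str) -> None:
        self.indexes.setdefault(tenant_id, []).append(name)

    def search(self, authenticated_tenant: str, term: str) -> list[str]:
        # The tenant comes from the verified caller identity, never from
        # the request body, so a caller cannot name another tenant's index.
        own_index = self.indexes.get(authenticated_tenant, [])
        needle = term.lower()
        return [name for name in own_index if needle in name.lower()]


service = TenantScopedSearch()
service.add("acme", "Sales Worksheet")
service.add("globex", "Sales Forecast")
acme_hits = service.search("acme", "sales")  # only entries from acme's index
```

Because the lookup key is the authenticated tenant rather than a request parameter, matching entries owned by another tenant are never candidates for the result.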

Architecture with LLM

Now let's look at how we enhanced our application to integrate with LLMs as seen in the Architecture overview. The block in green highlights the new services and interfaces.

AI-generated answers, search suggestions, and data modeling are all served by new common services that interact with the external Azure OpenAI service. The existing search service sends the search query and context to the NLP service to assist in converting the natural language search to sage search. Similarly, requests are passed to the NLP service with sufficient context to generate search suggestions and assist in data modeling.

As noted earlier, all internal and external interfaces are secured and authorized with the identity of the calling service. We keep the API key and secrets used to communicate with Azure in a secure vault that has role-based access control. Only the NLP service is authorized to use the API key and secrets to communicate with Azure Cognitive Services.
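
As a rough illustration of role-based access to a secret, the sketch below grants a single service identity read access to an API key. The `SecretVault` class, the secret name, and the service identities are hypothetical; a real deployment would rely on a managed vault product rather than an in-process dictionary.

```python
class AccessDenied(Exception):
    """Raised when a caller has no grant for the requested secret."""


class SecretVault:
    """Toy vault: each secret has an explicit set of allowed identities."""

    def __init__(self, secrets: dict[str, str], grants: dict[str, set[str]]):
        self._secrets = dict(secrets)
        self._grants = {name: set(ids) for name, ids in grants.items()}

    def read(self, caller_identity: str, secret_name: str) -> str:
        # Deny by default: a secret without a grant entry is unreadable.
        if caller_identity not in self._grants.get(secret_name, set()):
            raise AccessDenied(f"{caller_identity} may not read {secret_name}")
        return self._secrets[secret_name]


vault = SecretVault(
    secrets={"azure-openai-api-key": "example-key"},       # placeholder value
    grants={"azure-openai-api-key": {"nlp-service"}},      # only one reader
)
key_for_nlp = vault.read("nlp-service", "azure-openai-api-key")
```

Any other identity, such as the search service, would receive `AccessDenied` for the same call.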

Data handling

Each Azure OpenAI API call includes the user’s natural language query, the user’s worksheet metadata (column names and types), and sample values from the selected worksheets. Any column that has privacy-related data can be marked as “Do not index” to ensure it is not used for this processing.

We have a contract with Azure that ensures any data we send will not be retained by them, nor will it be used for training or enhancing their models. We have verified that Azure Cognitive Services is SOC 2 certified, compliant, and audited to meet this contract.
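
To make the shape of that input concrete, here is a minimal sketch of assembling the data for one request. The `Column` type, the `build_prompt_payload` function, and the dictionary layout are assumptions, not the actual prompt format. The article does not say whether a “Do not index” column still contributes its name, so the sketch takes the stricter reading and omits the column entirely.

```python
from dataclasses import dataclass, field

MAX_SAMPLE_VALUES = 3  # cap stated in the article for text attribute columns


@dataclass
class Column:
    name: str
    data_type: str                   # e.g. "VARCHAR" (illustrative)
    is_text_attribute: bool = False
    sample_values: list[str] = field(default_factory=list)
    do_not_index: bool = False       # privacy flag set by an administrator


def build_prompt_payload(query: str, columns: list[Column],
                         send_samples: bool = True) -> dict:
    described = []
    for col in columns:
        if col.do_not_index:
            continue  # assumption: flagged columns are left out entirely
        entry = {"name": col.name, "type": col.data_type}
        if send_samples and col.is_text_attribute:
            # Never send more than the capped number of sample values.
            entry["samples"] = col.sample_values[:MAX_SAMPLE_VALUES]
        described.append(entry)
    return {"query": query, "columns": described}


columns = [
    Column("region", "VARCHAR", True, ["East", "West", "North", "South"]),
    Column("revenue", "DOUBLE"),
    Column("ssn", "VARCHAR", True, ["000-00-0000"], do_not_index=True),
]
payload = build_prompt_payload("revenue by region", columns)
```

Passing `send_samples=False` models the control that disables sample values, leaving only column names, types, and the query.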

Upvoted user results are cached by the NLP search service to influence future requests. All entries are cached at a user level to prevent one user from influencing what another user sees.

The user feedback received for AI-generated answers is saved and isolated per tenant. This is used to influence the future results of users in the tenant context where the feedback is saved. Search and model assist hints are stored in the tenant-specific cloud storage bucket. This bucket is encrypted and includes role-based access control with grants to only tenant-specific compute instances.

The metadata of the tenant (worksheet column names) is stored in the tenant-specific compute instance, isolated from other tenants. There is no change to the storage contract; it continues as it was before the LLM integration.
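
One way to picture user-level caching is a cache whose key always includes the user. The sketch below is hypothetical: the `UpvoteCache` class, the key layout, and the whitespace and case normalization are illustrative choices, not details from the product.

```python
from typing import Optional


class UpvoteCache:
    """Toy cache of upvoted answers, keyed per tenant and per user."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str, str], str] = {}

    @staticmethod
    def _key(tenant_id: str, user_id: str, question: str) -> tuple[str, str, str]:
        # Normalize case and whitespace so trivial variants share an entry.
        return (tenant_id, user_id, " ".join(question.lower().split()))

    def record_upvote(self, tenant_id: str, user_id: str,
                      question: str, answer: str) -> None:
        self._entries[self._key(tenant_id, user_id, question)] = answer

    def lookup(self, tenant_id: str, user_id: str,
               question: str) -> Optional[str]:
        return self._entries.get(self._key(tenant_id, user_id, question))


cache = UpvoteCache()
cache.record_upvote("acme", "alice", "Revenue by  region", "answer-1")
alice_hit = cache.lookup("acme", "alice", "revenue by region")
bob_hit = cache.lookup("acme", "bob", "revenue by region")  # no entry for bob
```

Since the user ID is part of every key, an upvote recorded by one user cannot be returned for another.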

Protection against attacks on LLMs

While designing the solution, we took steps to protect ThoughtSpot from attacks against LLMs, such as prompt injection and prompt leaks.

Prompt injection

To counter prompt injection attacks, we never use the model output directly when the prompt contains a user-controlled field. In our case, we use GPT to transform the user query into a SQL statement.

The SQL is not used directly. Instead, ThoughtSpot translates the generated SQL into ThoughtSpot query language in order to correctly enforce the controls that have been configured on ThoughtSpot. If the user prompt is able to confuse GPT into generating SQL that queries data the user doesn’t have access to, ThoughtSpot will not return a response.
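
The idea of treating generated SQL as untrusted input can be sketched as follows. This is a toy under stated assumptions: the `translate` function, the returned dictionary (standing in for an internal query), and the single-table `SELECT ... FROM ...` grammar are invented for illustration, and a real translator would use a proper SQL parser and the platform's own access controls.

```python
import re
from typing import Optional

# Toy grammar: SELECT col[, col...] FROM table, and nothing else.
_SELECT = re.compile(
    r"^\s*SELECT\s+(?P<cols>[\w\s,]+?)\s+FROM\s+(?P<table>\w+)\s*;?\s*$",
    re.IGNORECASE,
)


def translate(generated_sql: str,
              allowed: dict[str, set[str]]) -> Optional[dict]:
    """Return an internal query, or None when the SQL must be rejected.

    `allowed` maps each table the user may read to its readable columns.
    """
    match = _SELECT.match(generated_sql)
    if match is None:
        return None  # anything outside the toy grammar is refused
    table = match.group("table").lower()
    cols = [c.strip().lower() for c in match.group("cols").split(",")]
    permitted = allowed.get(table)
    if permitted is None or not all(c in permitted for c in cols):
        return None  # references data the user cannot access
    return {"source": table, "columns": cols}


user_access = {"sales": {"region", "revenue"}}
ok = translate("SELECT region, revenue FROM sales", user_access)
blocked = translate("SELECT salary FROM payroll", user_access)
stacked = translate("SELECT region FROM sales; DROP TABLE sales", user_access)
```

The generated statement is never executed. It is only a source of table and column names, each of which is checked against what the user may read before an internal query is built.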

Prompt leak

Similarly, since the output of GPT is never used directly when user input is involved, there’s no chance of the prompt getting leaked due to a malicious prompt.

Security in ThoughtSpot SDLC

Security gates are in place at every stage of our Software Development Lifecycle (SDLC).

For any enhancement, ThoughtSpot begins a security-focused review at the requirement-gathering stage. This means that when we evaluate vendors, we carry out a security risk assessment.

In the case of ThoughtSpot Sage, we completed a thorough risk assessment of Azure Cognitive Services by checking their certification and security posture. This assessment is also required for our ISO 27001 and SOC 2 audits so that we remain certified.

This initial vendor risk assessment is followed by an architecture review and a design review, where we tabulate data and technical assets. We continue threat modeling and analysis to identify and mitigate risks throughout the design process. Then the implementation is reviewed to ensure all identified security controls are in place and that these controls match the security considerations in the design. The subsequent cloud deployment is continuously monitored using security incident management services. Any threats detected are analyzed and addressed with an SLA based on the severity of the threat. This same process was applied during the development of ThoughtSpot Sage.

ThoughtSpot follows security due diligence in the development, data handling, and deployment of all product enhancements, including our recent LLM integration with the ThoughtSpot application. We continue to monitor the ever-changing threat landscape, and we are ready to take immediate action to mitigate any risk to the application or your data. Your data’s security remains our top priority.