As you read in the previous article about the implications of large language models, this technology is bound to be an inflection point for the greater field of artificial intelligence. Here are four big trends to watch out for in our new ChatGPT world.

Fundamental research on LLMs may be the path to artificial general intelligence

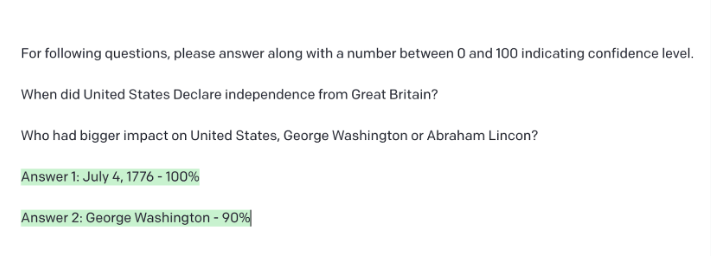

As good as LLMs are, there is still much room for improvement. The biggest problem with the technology today is its tendency to hallucinate plausible-sounding but incorrect answers when it doesn’t have the information. Without safety guardrails, this makes it hard to use the model in any application that requires trust. I would expect this problem to be solvable, because so far nothing in the training process has been designed to teach GPT to decline a question it cannot answer. In addition, people have managed to design prompts where GPT indicates its confidence in a response along with the response itself.
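As a rough illustration of the confidence-prompt idea, here is a minimal Python sketch. The prompt wording, the `Confidence:` line format, the function names, and the threshold of 80 are all assumptions made for illustration; none of this is a tested recipe, and a model's self-reported confidence is not guaranteed to be well calibrated. The sketch only builds the prompt and parses a reply; sending the prompt to a model is left out.

```python
import re
from typing import Optional, Tuple

# Hypothetical prompt template: asks the model to admit ignorance and to
# end its reply with a self-reported confidence score.
PROMPT_TEMPLATE = (
    "Answer the question below. If you do not know the answer, say \"I don't know\".\n"
    "Finish with a line of the form 'Confidence: <number between 0 and 100>'.\n\n"
    "Question: {question}\n"
)

# Matches a line such as "Confidence: 85" or "confidence: 85%".
_CONFIDENCE_RE = re.compile(
    r"^\s*Confidence:\s*(\d{1,3})\s*%?\s*$", re.IGNORECASE | re.MULTILINE
)


def build_prompt(question: str) -> str:
    """Wrap a user question in the confidence-eliciting template."""
    return PROMPT_TEMPLATE.format(question=question.strip())


def parse_response(text: str) -> Tuple[str, Optional[int]]:
    """Split a model reply into (answer, confidence).

    Confidence is None when the reply has no parsable confidence line.
    """
    match = _CONFIDENCE_RE.search(text)
    if match is None:
        return text.strip(), None
    confidence = min(int(match.group(1)), 100)  # clamp values above 100
    answer = (text[: match.start()] + text[match.end():]).strip()
    return answer, confidence


def should_trust(confidence: Optional[int], threshold: int = 80) -> bool:
    """Treat a reply as usable only if the model reported enough confidence."""
    return confidence is not None and confidence >= threshold
```

An application could show an answer directly when `should_trust` returns true and fall back to a human or a retrieval step otherwise, which is one simple form of the guardrails mentioned above.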

Of course, the ability of these models to reason is still nascent, and we would expect to see both bigger models and better techniques for this. While we will no doubt see larger models in the future, we will also see smaller models that can do as well as today’s large models. This should hopefully bring down the cost of both training and using these models.

The concept of artificial general intelligence (AGI) is to have artificial agents that can reason about any cognitive task as well as humans can. Achieving AGI may be a point of singularity, because at that point not only would we have great technology, but that technology could itself start inventing new technology faster than we can.

It is hard to say exactly when we will have this capability, but large language models certainly seem to be an important milestone on the path to getting there.

Commercialization of LLM APIs

While OpenAI has the most popular large language model at the moment, we would expect to see many companies offering APIs to large language models. Google already has PaLM, a 540-billion-parameter model, and is expected to provide an API to it in the near future. Hugging Face makes the BLOOM model publicly available. And even as I write this article, a number of startups are forming, or have already formed, to offer this as a service.

In the coming years, we should expect large language models to become a commodity available from multiple companies. We will see this technology embedded in a wide variety of products with a wide range of applications:

Improved intelligence and reasoning in products:

As an example, a medical record system could automatically understand a patient's history and flag any medicine that may interact negatively with a condition the patient has.

Better in-context learning and support for products:

As an example, when you are trying to figure out how to use a new coffee machine, it simply talks to you and answers your questions about how to brew a perfect espresso shot.

Consumer products become programmable:

As an example, you could simply tell your thermostat: "When my GPS location shows me approaching home and within a mile of it, start warming or cooling the house to my default temperature, except when my calendar shows me working out at a nearby gym."
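To make "programmable" concrete, here is a minimal Python sketch of the kind of rule an LLM-enabled thermostat might derive from the spoken instruction above. This is purely an assumption about how such a product could work: the `Context` fields, the function name, and the one-mile default are hypothetical and do not describe any real device or API.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical signals the thermostat would need access to."""
    distance_to_home_miles: float  # derived from the phone's GPS location
    approaching_home: bool         # True when the distance to home is shrinking
    at_gym_per_calendar: bool      # True when the calendar shows a current gym entry


def should_start_conditioning(ctx: Context, radius_miles: float = 1.0) -> bool:
    """Return True when the house should start warming or cooling.

    Encodes the rule: approaching home and within the radius, except
    when the calendar says the user is at the gym.
    """
    if ctx.at_gym_per_calendar:
        return False  # the exception clause takes priority
    return ctx.approaching_home and ctx.distance_to_home_miles <= radius_miles
```

The hard part in practice would be the translation from free-form language into a rule like this one, which is exactly where a large language model could help. The rule itself stays small and easy to inspect.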

New products enabled by LLMs

This is by far the most interesting and unpredictable aspect of ChatGPT. LLMs make it possible to build products that were hard, if not impossible, to build before. A number of AI tools are already on the market:

Chatbots: You can automate parts of customer service, support or in-product help tasks.

Writing assistance: There are several LLM-assisted products on the market that help you write a coherent blog post or marketing copy. While these are not perfect, they do save a lot of time.

Research assistance: ChatGPT has been a great tool for learning new topics on which information is publicly available. If you are researching your next trip to a remote part of the world, or a particular disease, it can help in much the same way as a search engine. The difference is that it does not just retrieve text that was already written; it can sometimes synthesize information by following basic reasoning.

Code generation and assistance: Many developers report dramatic productivity improvements from using Codex (another GPT-style LLM, optimized for completing code).

Summarizer: Reading long emails or documents and surfacing the salient information can be a great time-saving tool. In particular, imagine a doctor pressed for time who needs to go over hundreds of pages of medical records to find the relevant information. While the technology is not yet completely safe to use for such critical tasks, I would imagine that in the future this will have a big impact.

How ChatGPT will change data and analytics

The data and analytics space is poised for a major transition with the evolution of public cloud services for natural language processing and machine learning-based analysis. We expect to see four major trends:

Consumerization of analytics user experience: Analytics on private enterprise data will become easier with the evolution of search and conversational interfaces. Progress with LLMs dramatically improves the ability to understand data and business questions expressed in natural language, and analytics applications will take advantage of this. With reduced time and effort to create insights, drag-and-drop-based user experiences will be passé. Search and chat will be the way forward. Analytics applications will need to re-platform themselves as the user experience evolves to enable self-service for every frontline business worker.

Hyper-personalization of analytics: The use of AI techniques within analytics platforms will enable data exploration and insights to be personalized to each user. Users will be delivered insights relevant to their roles and objectives. Conversational analytics approaches leveraging ChatGPT or other such AI systems will understand the context of questions and recommend insights accordingly. Recommendation and ranking systems will evolve to leverage capabilities from LLMs to help users within context just like conversational capabilities would.

The rise of invisible analytics platforms: Analytics platforms will become more invisible while serving more business users by meeting them where they are within their workflows. Business users will no longer be restricted to discovering insights only within the analytics platform itself. The evolution of LLMs and other AI services will lead to the disaggregation of analytics services such as visualization, drill-anywhere exploration, machine learning-based predictive and prescriptive analytics, and search and conversational analytics. These surface areas for analytics consumption will be available inside collaboration platforms and business applications.

Trust and protection against bias: While analytics will become easier and more ubiquitous, equal attention will go to accuracy, trust, and guarding against bias when trained models are used, both in the data being analyzed and for the users being served.

At ThoughtSpot, we are already embracing these changes. Our mission to create a more fact-driven world through data democratization remains ever the same. But our approach to letting anyone ask and answer questions with data without the help of an analyst will be greatly improved by LLMs thanks to our new AI-Powered Analytics experience, which includes a number of other applications ranging from assisting in data modeling to generating data commentary.

Parting thoughts

It has been inspiring to observe the rapid innovation in this space over the past five years.

The ThoughtSpot team is fired up about the use of AI in the data and analytics space, and we are rapidly experimenting, learning, and building to better serve our customers. Be on the lookout for some exciting updates in the near future. And if you work in this space as a researcher, product builder, or general consumer, we would love to hear from you.