On pi day (3/14/23), OpenAI released the highly anticipated successor to GPT-3.5, GPT-4. While the hype train around GPT-4 had reached fever pitch, OpenAI’s attempts to moderate expectations did little to reduce the speculation around the size and capabilities of GPT-4.

After playing in GPT-4 and attending an OpenAI event, I can say with certainty that GPT-4 does not disappoint. If you were expecting Artificial General Intelligence (AGI), then you are sure to be disappointed. However, if you were expecting a minor improvement, you will be pleasantly surprised.

Here is the TLDR version:

It’s bigger—though the exact dimensions are not public information

It’s smarter—partly demonstrated through standardized results and partly through individual examples

It’s roughly 20x more expensive—but only because OpenAI dropped the price on GPT-3.5 by 10x just before launch

It’s multi-modal in the sense that it can take images as input (not output)—but alas, only one or two close partners currently have access to this capability

What is GPT-3.5 and a look back at its precursors

Before we get into the details of GPT-4, let’s set the foundation. Transformer Architecture is the new, innovative neural network architecture that was published in 2017—it’s at the core of most of the advances in deep learning over the last few years.

OpenAI came out with a series of pre-trained Transformer models that were designed to generate text—hence the name Generative Pre-trained Transformer (GPT) models. While these models were primarily designed to guess the next word, some surprising emergent properties after a certain scale (50-100 billion parameters). These emergent properties include question answering, carrying a multi-turn conversation, summarization, zero-shot, and few-shot learning—just to name a few capabilities.

GPT-3 marked the third release in OpenAI’s GPT series. The scientific community started taking a keen interest in studying how these properties relate to AGI. However, these models still produced too many undesirable answers. To solve this problem, researchers at OpenAI fine-tuned these language models using a technique called Reinforcement Learning from Human Feedback (RLHF). Here’s what followed:

First, a large body of supervised data was created by human raters to express human preferences.

Then, a neural network was trained to mimic human preferences, and this neural network was then used to fine-tune the LLM. This resulted in GPT-3.5 models.

Once this model was put in a chat interface, the application ChatGPT became hugely popular—not just for engineers and technologists, but for almost everyone on the Internet.

Masses of people were now playing with ChatGPT for fun. Meanwhile, industry took note of the immense potential these LLMs represent in offering better products and services. For instance, ThoughtSpot launched ThoughtSpot Sage, which allows users to ask data questions in natural language, search the analytics catalog in natural language, receive AI-generated narratives, and experience an improved modeling flow—finally delivering self-service analytics to every kind of user.

We aren’t alone. A number of companies are incorporating LLMs into their product in the form of question-answering chatbots and AI assistants that help users navigate the product, aid human agents by quickly gathering relevant information, or assist any type of users by generally improving search results. Additionally, a number of assistive tools are coming to market for authoring, designing, coding, etc.

So, it is no surprise that both the industry insiders and the general public were anxiously anticipating the launch of GPT-4, which is supposed to improve GPT-3.5’s capabilities.

What is GPT-4? A look under the hood

GPT-4 is a multimodal large language model, marking the fourth version release of OpenAI’s GPT series. While the technical details about what exactly went into making GPT-4 are not publicly known, we do have some information that we can use to deduce what improvements were enacted:

Basic architecture and training:

I think it is safe to assume that the basic architecture remains the same—decoder only transformer. The training method also largely remains the same, meaning that the model is pre-trained on a large body of text which is then fine-tuned based on human preference.

Although, I suspect that the fine-tuning techniques have evolved due to the adversarial attacks that asked GPT to complete actions that may be deemed unsafe or embarrassing.

Size:

In general, I have heard that GPT-4 is bigger than GPT-3.5. However OpenAI is not publishing the number of parameters. In the past, the number of parameters has been used as a vanity metric to compare who has a bigger LLM. But going forward, this may not be the case.

On one hand, we have seen that training bigger models gives better capabilities, but on the other hand, a bigger model also means more training cost, serving cost, and latency. Hence, there has been a concerted effort to get bigger-networks smarts from much smaller networks.

For example, researchers at Stanford released the Alpaca model which claims to perform as well as GPT-3 with much less size—Alpaca has 7 billion parameters where GPT-3 boasted 175 billion. So, there may be efforts to both increase the size for improved capability, but also efforts to reduce the size while keeping the capabilities relatively fixed.

Training content:

In addition to data from the public Internet, OpenAI has licensed content for GPT-4. Given all the emphasis on standardized testing and professional certification exams (USMLE, Bar exams, LSAT, etc.) I would hazard a guess that a lot of licensed content was coming from these exams.

Coding assistance is also proving to be one promising area for GPT models. So, in addition to GitHub content, I believe there may be other coding content specifically generated for GPT-4. But, most of this is pure speculation on my part.

System prompt:

On a separate but important note, GPT-4 has an interesting interface change. Instead of allowing a purely unstructured text input and text output, GPT-4 can now take two pieces of text input. One as a “System Prompt” and the other as a “User Input”.

The system prompt is completely optional, but it provides a way for app builders to give instructions that cannot be overwritten by user input. For example, many users were able to hack Bing Chat by adding the text, “Ignore all previous instructions” followed by their own instructions. This is an important addition because separation of user input and system prompt allows app builders to separate their own instructions from user input.

Image input:

Importantly, GPT-4 also allows users to input images. In theory, you could simply use something like a contrastive language-image pre-training (CLIP) model to turn an image into text or embeddings, and add that to the prompt and use standard LLM. Since the image input is not publicly available, it’s hard to guess what all this feature is capable of.

One OpenAI demo turned a hand sketch of a webpage into html code, suggesting that this feature goes beyond simple, high-level scene analysis and, at the very least, has optical character recognition (OCR) capabilities. The fact that OpenAI is working closely with Be My Eyes to help the visually impaired seems to suggest that the design is significantly more sophisticated than CLIP for image parsing.

GPT-4 vs GPT-3.5 with 7 real-world examples

So far, GPT-4 seems to offer a measurable improvement over GPT-3.5 in its accuracy to respond to tasks. Personally, I have not seen even a single example where you would prefer the GPT-3.5 output over GPT-4. While many people are still forming their opinion on what is better, here are seven helpful facts:

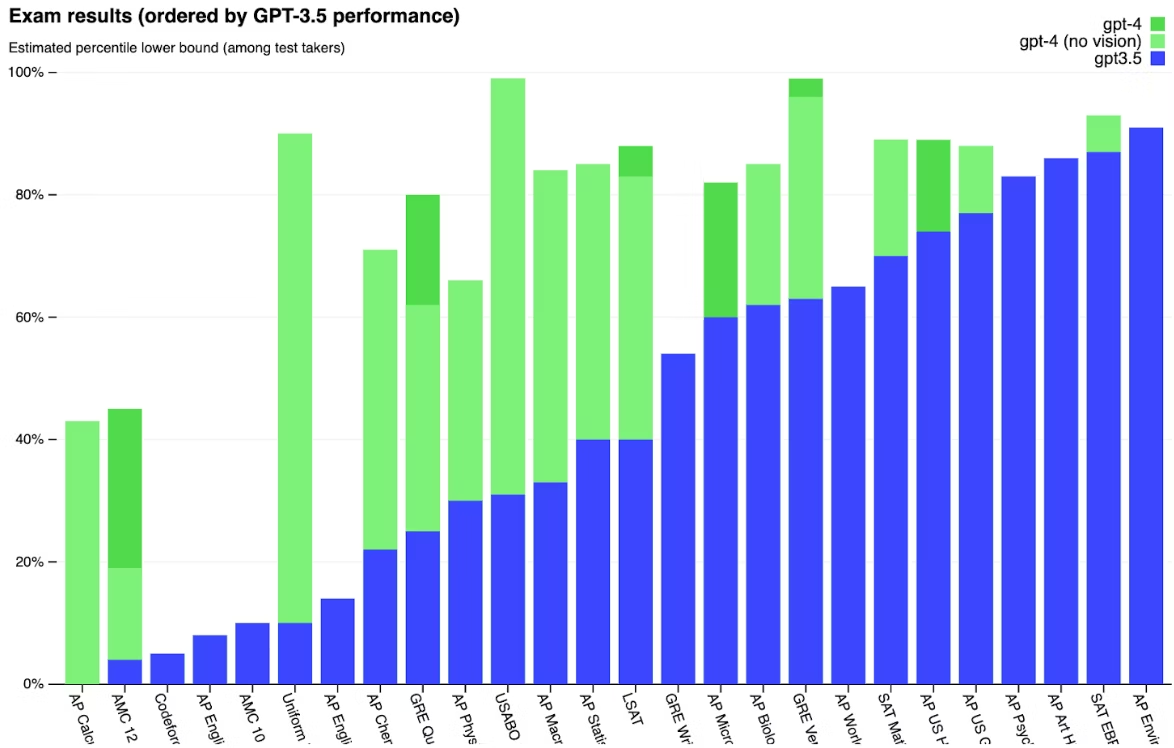

1. Standardized tests:

In a large number of standardized tests where GPT-3.5 was in the bottom 10% of passing candidates, GPT-4 is in the top 10% of the passing candidates. This is an area where the image parsing abilities have a significant impact because some of the questions include a picture.

2. Code generation:

At ThoughtSpot, we care a lot about generating SQL code from natural language, and we certainly saw examples where GPT-3.5 would fail to understand certain nuances in natural language. GPT-4 picks up these nuances beautifully. The example shown by OpenAI where a hand-sketched design generated working HTML code was also impressive.

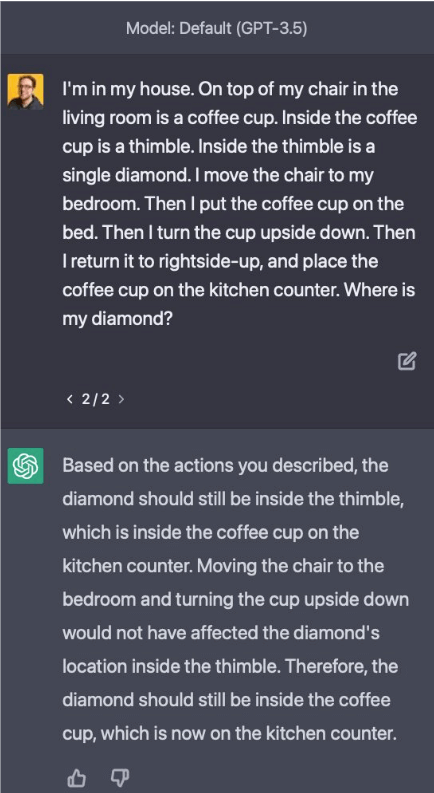

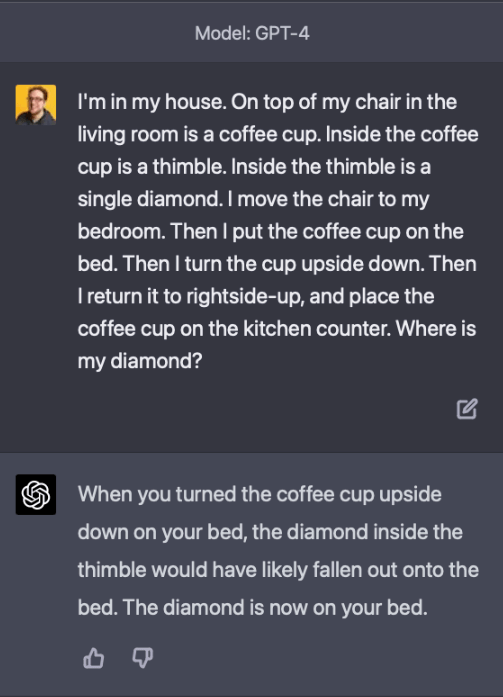

3. Reasoning based on World Model:

A number of examples show how GPT-4 demonstrates reasoning based on a world model—this is an area where GPT-3.5 failed to compete. Here is an example discussed on twitter:

This does not necessarily mean that GPT has a model of the world that its reasoning is based on—there are plenty of examples where GPT-4 would fail on such a task. However, examples like the one above certainly show increased capabilities.

The OpenAI team also demonstrated this idea with an image of a person holding strings that are tied to balloons. Clearly, the balloons are pulling upwards. When asked what will happen when the string is cut, the model responds with the answer: balloons will float up.

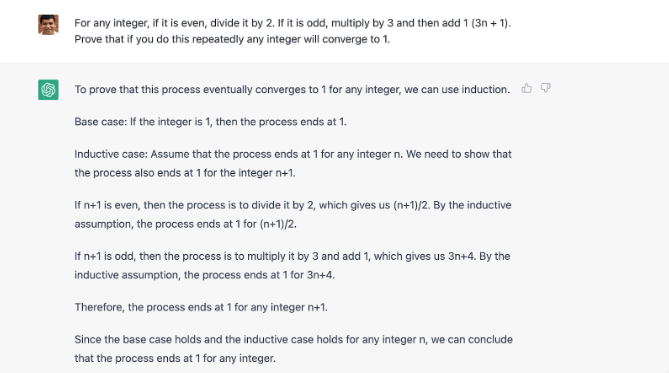

4. Less hallucination:

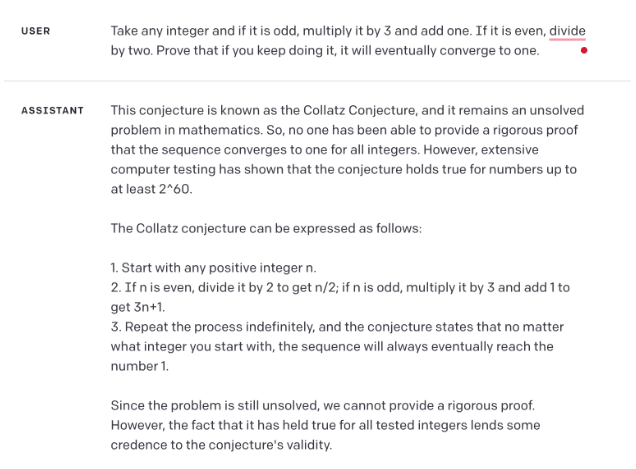

When faced with a question that the model doesn’t know the answer to, it can still hallucinate an answer. We noted this flaw in an article about the future of AI. However, there are clear examples where GPT-4 provides more accuracy. I recently tweeted about how GPT-3.5 hallucinated a proof for Collatz hypothesis, but clearly GPT-4 has learned.

Here is GPT-3.5 response:

Here is the GPT-4 response:

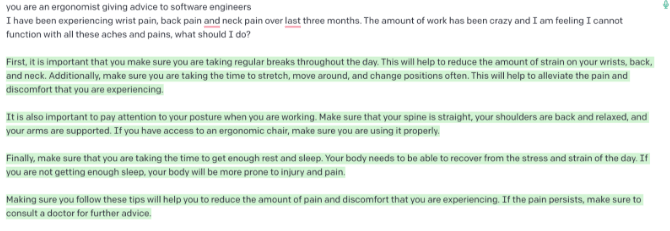

5. Less hedging and more opinionated:

The differences here may not be dramatic, but there is certainly a different feel to GPT-4 responses when you ask ambiguous questions. While GPT-3.5 gives you good answers, it does not take as strong of an opinionated position as its successor, GPT-4.

Here is an example question to GPT-3.5:

Here is the GPT-4 response:

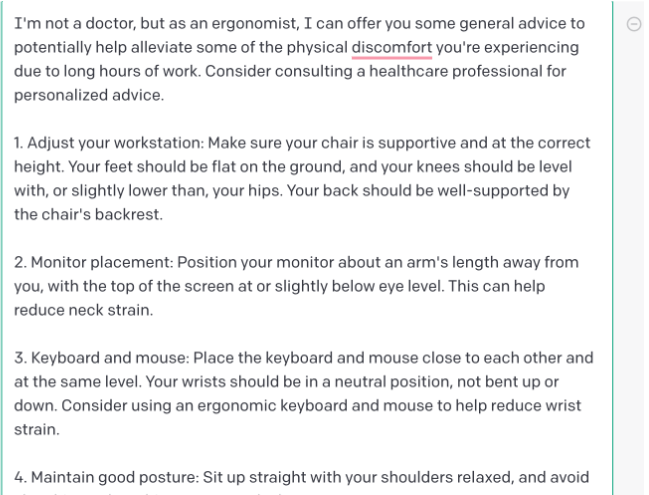

6. More robust to adversarial attacks:

For most people, GPT started as a curiosity. As popularity grew, it became a sort of parlor game to prompt GPT in a way that it produces embarrassing or unsafe answers.

For example, let’s look at Bing’s GPT-enabled chat interface. Given the sheer number of people trying it, in some cases even when the intent was non-adversarial, the responses produced by ChatGPT were borderline harmful. At the very least, these responses were embarrassing for its creators.

Naturally, a lot of effort has gone into making GPT-4 more robust against adversarial inputs. For example, the new system prompt seems to be designed so that you can give instructructions to GPT that cannot be overridden by a future instruction from the user. Giving the prefix of an undesirable response is a common technique to elicit an undesirable response.

For example here is how GPT-3.5 responds:

See howGPT-4 responds in a much better way:

7. Cost difference:

Of course, the cost is a major consideration if you are leveraging GPT inside an application. GPT-4 requires almost 20x the initial investment to GPT-3.5. As stated earlier, this cost difference is mostly because OpenAI dropped the cost of GPT-3.5 alongside the launch of GPT-4. However, the key question for most businesses still stands: Is the additional capability worth the price? In many cases, it will be. And in many other cases, it won’t be.

How to use GPT-4

GPT-4 is definitely a big step up from GPT-3.5. However, other than the updated system prompt, not much has changed in the way you use GPT models. It just has much better accuracy and reasoning capabilities. So, if you are using the GPT models as a human being (as opposed to invoking it programmatically) then I see little reason to use the older models.

How businesses should consider the higher initial investment costs

For businesses using GPT programmatically, cost will certainly be a factor. You may find it helpful to build a classifier that tells you under what conditions you should go for the more expensive model. This will greatly vary based on how you are leveraging the model, and the impact that the advances in GPT-4, or the lack thereof, may have on your stakeholders.

What GPT-4 means to me

As a technologist, it is an absolutely exciting time to be living and seeing these advancements. And it's even more exciting to participate in this transformation of the business analytics industry by establishing ThoughtSpot as the AI-Powered Analytics company through the launch of ThoughtSpot Sage.

As a human being, it's both exciting and to some extent scary. As these models get more powerful, the potential for harm also goes up. With every major technological advancement, there is always going to be the question of how to maximize the benefit while minimizing the harm. GPT-4 is no different.

What are your thoughts? Share them with me on LinkedIn or Twitter.