What is ETL (Extract, Transform, Load)?

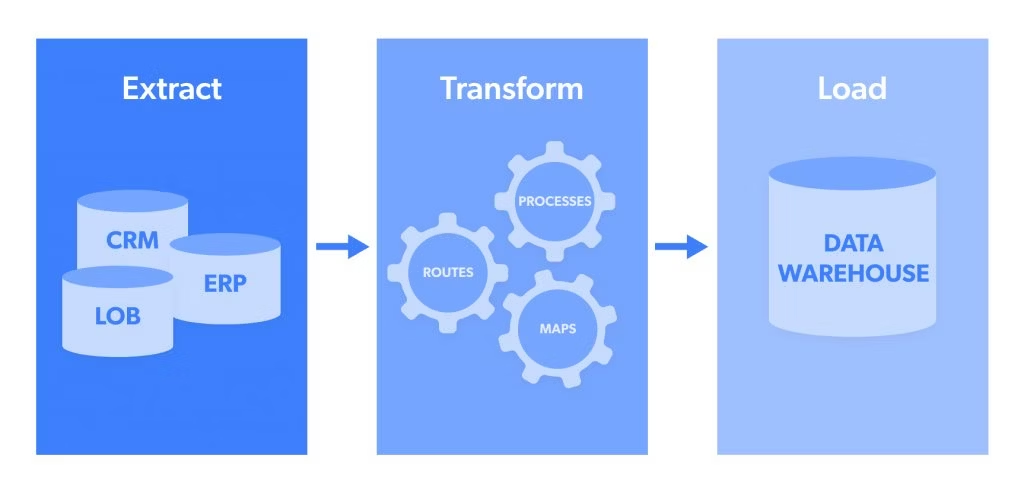

ETL (Extract, Transform, Load) is a process that involves extracting data from a source, transforming it to meet the requirements of the target destination, and then loading it into that destination. It can also be used to migrate data from one database to another, or from one type of database to another. Various ETL tools (such as Hevo) and approaches can be used, depending on the situation. Data professionals need to be familiar with the ETL process in order to move data efficiently between different systems.

As mentioned, ETL stands for extract, transform, load.

Extract: The first step in ETL is to extract the data from its current location. This can be done manually or through an automated process.

Transform: The next step is to transform the data into the desired format. This may involve cleaning up the data, converting it to a different format, or performing some other type of transformation.

Load: The last step is to load the transformed data into its new destination. This can be done by importing it into a new database, file, or another type of data store.
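The three steps can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not a production design: the CSV contents, the `customers` table, and the function names are all assumptions made for the example, and an in-memory SQLite database stands in for the destination.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this could be a file, an API, or a database.
SOURCE_CSV = """id,name,signup_date
1, Alice ,2023-01-15
2,BOB,2023-02-03
"""

def extract(text):
    """Extract: read raw rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cast ids to int, trim whitespace, and normalize name casing."""
    return [
        (int(row["id"]), row["name"].strip().title(), row["signup_date"])
        for row in rows
    ]

def load(records, conn):
    """Load: write the transformed records into the (assumed) customers table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for the real destination
load(transform(extract(SOURCE_CSV)), conn)
loaded = conn.execute(
    "SELECT id, name, signup_date FROM customers ORDER BY id"
).fetchall()
print(loaded)
```

Keeping each step in its own function makes it easy to swap the source or destination later without touching the transformation logic.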

What is an ETL pipeline?

An ETL data pipeline is a process for extracting data from one or more sources, transforming it into a format that can be used by downstream applications, and loading it into those applications. ETL pipelines are common in data warehousing and business intelligence applications, where they are used to extract data from transactional systems, transform it into a format that is suitable for analysis, and load it into data warehouses or business intelligence solutions.

ETL pipelines can be complex, with multiple stages that must be executed in a specific order. For example, data extractors may need to run before transformers, and transformers may need to run before loaders. ETL pipelines can also be triggered by events, such as the arrival of new data in a source system.
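One simple way to enforce stage ordering is to keep the stages in a list and feed each stage's output to the next. The sketch below assumes that approach; the stage bodies, the `warehouse` list, and the `on_new_data` hook are hypothetical placeholders, and real orchestration tools offer much more (retries, scheduling, dependency graphs).

```python
from functools import reduce

def extract(_):
    # Placeholder extractor returning hard-coded rows for illustration.
    return [{"sku": "a1", "qty": "2"}, {"sku": "b2", "qty": "5"}]

def transform(rows):
    # Normalize SKU casing and cast quantities to integers.
    return [{"sku": r["sku"].upper(), "qty": int(r["qty"])} for r in rows]

warehouse = []  # stand-in for the target system

def load(rows):
    # Append the transformed rows to the stand-in target.
    warehouse.extend(rows)
    return rows

# The list order encodes the execution order: extract, then transform, then load.
PIPELINE = [extract, transform, load]

def run_pipeline(stages, initial=None):
    """Run each stage in order, passing the previous stage's output along."""
    return reduce(lambda data, stage: stage(data), stages, initial)

def on_new_data():
    """Hypothetical event hook: call this when new source data arrives."""
    return run_pipeline(PIPELINE)

result = on_new_data()
print(warehouse)
```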

ETL pipelines can be built using a variety of tools and technologies. Some ETL tools are designed to work with specific types of data sources or target applications. Others are more general-purpose, providing a framework that can be used to build ETL pipelines for a variety of purposes.

4 common ETL challenges and how to fix them

Data professionals commonly face four ETL challenges:

1. Data cleansing

Data cleansing, also known as data scrubbing, is the process of identifying and correcting or removing incomplete, incorrect, or irrelevant data. Data cleansing is a crucial step in ETL because data quality determines the accuracy and reliability of any analysis built on it. One common challenge in data cleansing is dealing with missing or inconsistent data. The best strategy to overcome this challenge is to identify the source of the problem and fix it. For example, if the source data is missing critical information, try to obtain it from an alternate source or create a default value. If the data is inconsistent, use rules or algorithms to standardize it.
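As a rough sketch of those two tactics, the example below fills a missing value with a default and standardizes inconsistent values with a lookup rule. The field names, the `UNKNOWN` default, and the alias table are assumptions chosen for illustration; the right rules depend on your data.

```python
DEFAULT_COUNTRY = "UNKNOWN"  # assumed default for missing values

# Assumed standardization rules mapping common variants to one code.
COUNTRY_ALIASES = {"usa": "US", "u.s.": "US", "united states": "US", "uk": "GB"}

def clean_record(record):
    """Return a copy of the record with the country field defaulted or standardized."""
    cleaned = dict(record)
    raw = (cleaned.get("country") or "").strip()
    if not raw:
        cleaned["country"] = DEFAULT_COUNTRY
    else:
        cleaned["country"] = COUNTRY_ALIASES.get(raw.lower(), raw.upper())
    return cleaned

def cleanse(records):
    """Drop records missing the required email field, then clean the rest."""
    return [clean_record(r) for r in records if (r.get("email") or "").strip()]

raw_records = [
    {"email": "a@example.com", "country": "usa"},
    {"email": "b@example.com", "country": None},
    {"email": "", "country": "UK"},            # removed: no email
    {"email": "c@example.com", "country": " de "},
]
cleaned_records = cleanse(raw_records)
print(cleaned_records)
```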

2. Data transformation

Data transformation is the process of converting data from one format to another. Data transformation can involve several operations, such as filtering, aggregation, and joining. One of the biggest challenges in data transformation is managing complex data relationships. To deal with this, developing a clear understanding of the data relationships and establishing a standard data model is crucial. This will help ensure that data transformation operations are consistent and produce accurate results.
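The three operations named above (filtering, joining, and aggregation) might look like this in plain Python. The `orders` and `customers` data and their field names are invented for the example; in practice the same logic is often written in SQL or a dataframe library.

```python
from collections import defaultdict

# Hypothetical source records.
orders = [
    {"order_id": 1, "customer_id": 10, "amount": 25.0, "status": "paid"},
    {"order_id": 2, "customer_id": 11, "amount": 40.0, "status": "cancelled"},
    {"order_id": 3, "customer_id": 10, "amount": 15.0, "status": "paid"},
    {"order_id": 4, "customer_id": 12, "amount": 30.0, "status": "paid"},
]
customers = {10: "EMEA", 11: "APAC", 12: "APAC"}  # customer_id -> region

# Filter: keep only paid orders.
paid = [o for o in orders if o["status"] == "paid"]

# Join: attach each order's region by looking up its customer_id.
joined = [{**o, "region": customers[o["customer_id"]]} for o in paid]

# Aggregate: total revenue per region.
revenue = defaultdict(float)
for row in joined:
    revenue[row["region"]] += row["amount"]
revenue_by_region = dict(revenue)
print(revenue_by_region)
```

Making the relationship explicit (here, `customer_id` as the join key) is the kind of shared data model that keeps transformations consistent across pipelines.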

3. Data loading

Data loading is the process of loading data into a target system or database. One common challenge in data loading is managing large volumes of data. To tackle this challenge, optimizing the loading process by using efficient algorithms and data structures is essential. Additionally, consider breaking the data into smaller chunks to load it in parallel, which can help reduce the time required to load the data.
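A minimal sketch of chunked loading, assuming an SQLite target and a hypothetical `events` table: rows are read lazily and inserted in fixed-size batches, each in its own transaction. The chunks are loaded sequentially here; loading them in parallel depends on what the target system supports and is not shown.

```python
import sqlite3
from itertools import islice

def chunked(iterable, size):
    """Yield successive lists of up to `size` items from any iterable."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk

def load_in_chunks(conn, rows, chunk_size=1000):
    """Insert rows in batches, one transaction per chunk; return the chunk count."""
    chunks_loaded = 0
    for chunk in chunked(rows, chunk_size):
        with conn:  # commits on success, rolls back this chunk on error
            conn.executemany(
                "INSERT INTO events (id, payload) VALUES (?, ?)", chunk
            )
        chunks_loaded += 1
    return chunks_loaded

conn = sqlite3.connect(":memory:")  # stand-in for the real target database
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")

rows = ((i, f"event-{i}") for i in range(2500))  # generator: never fully in memory
n_chunks = load_in_chunks(conn, rows, chunk_size=1000)
row_count = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
print(n_chunks, row_count)
```

Committing per chunk keeps memory use bounded and means a failure only rolls back the current batch rather than the whole load.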

4. Data management

Data management is the process of managing ETL data sources, transformation rules, and target systems. One common challenge in data management is maintaining data quality metrics over time. To deal with this challenge, establish data governance policies, including data quality rules and monitoring processes. These policies can help ensure that the data remains accurate and consistent over time.

ETL tools can help with this, but it can still be a challenge to keep track of everything and ensure that processes are running smoothly.
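One lightweight way to monitor data quality over time is to compute a few metrics after every run and compare them against agreed thresholds. The sketch below assumes a completeness metric (the share of non-empty values per field); the field names and threshold values are hypothetical and would come from your own governance policy.

```python
def quality_metrics(rows, required_fields):
    """Compute the row count and per-field completeness (share of non-empty values)."""
    total = len(rows)
    metrics = {"row_count": total}
    for field in required_fields:
        filled = sum(1 for r in rows if r.get(field) not in (None, ""))
        metrics[f"{field}_completeness"] = filled / total if total else 0.0
    return metrics

# Assumed data quality rules: minimum acceptable completeness per field.
THRESHOLDS = {"email_completeness": 0.9, "country_completeness": 0.5}

def check_thresholds(metrics, thresholds):
    """Return the names of metrics that fall below their minimum threshold."""
    return [
        name for name, minimum in thresholds.items()
        if metrics.get(name, 0.0) < minimum
    ]

rows = [
    {"email": "a@example.com", "country": "US"},
    {"email": "", "country": "DE"},
    {"email": "c@example.com", "country": None},
    {"email": "d@example.com", "country": "FR"},
]
metrics = quality_metrics(rows, ["email", "country"])
violations = check_thresholds(metrics, THRESHOLDS)
print(metrics, violations)
```

Storing the metrics from each run makes it possible to spot gradual drift, not just outright failures.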

Types of ETL tools

There are four main types of ETL tools:

Batch processing ETL tools

Batch processing ETL tools are designed to process data in large batches at scheduled intervals. Batch processing is ideal for organizations that do not require real-time access to data and can afford to wait for the data to be processed. Batch processing ETL tools are often used for data warehousing, data migration, and data consolidation.

Cloud-native ETL tools

Cloud-native ETL tools are ideal for organizations that require agility and flexibility in processing data in the cloud. Cloud-native ETL tools are designed to run in cloud environments such as Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform. These tools are highly scalable and can process data from cloud-based sources.

Open-source ETL tools

Open-source ETL tools are often used for data integration, data migration, and data warehousing. Open-source ETL tools are free and open to the public, making them highly accessible for small to medium-sized organizations with limited budgets. These tools are also highly customizable, so organizations can tailor them to their specific needs.

Real-time ETL tools

Real-time ETL tools are designed to process data in real time or near real time. These tools are ideal for organizations that require immediate data access for real-time decision-making. Real-time ETL tools are often used for operational business intelligence, streaming analytics, and event processing.

Get more insights from your data

In today's business world, data is key to success. That's why having self-service analytics is essential for any company that wants to make the most of its data. ThoughtSpot enables everyone within an organization to limitlessly engage with live data once it completes the ETL process into a cloud data warehouse, making it easy to create personalized, actionable insights through live analytics. With ThoughtSpot, you can easily and quickly create reports and Liveboards from your data without relying on IT or even knowing SQL. If you're looking for an easy-to-use analytics solution to help you get more insights from your data, sign up for a ThoughtSpot free trial today!